How I built it

Why custom geometry reconstruction?

Lens Studio has a built-in World Mesh: a 3D structure that represents real-world geometry, reconstructed from a LiDAR sensor or from ML depth estimation on the device cameras. However, it has a disadvantage: it only starts working 2-3 seconds after the lens launches and then appears slowly, piece by piece, which doesn’t look great. And when a user walks around, they still have to wait until new parts of the world mesh are processed and displayed.

So I decided to build a custom geometry reconstruction using only the depth map. Here is what the whole process looks like:

It’s less stable than the built-in LiDAR World Mesh, but it has a big advantage: it’s instant, so users don’t have to wait for processing and can see the effect immediately. Here is the World Mesh in comparison with my depth geometry reconstruction approach:

Triplanar Mapping

To texture the world, two data objects are required: world geometry and world normals. Both are made using OpenGL shaders inside Lens Studio.

- In the World Geometry texture, every pixel represents a 3D position in the world relative to the initial phone position (where the lens was launched). It accounts for phone movement and rotation.

- In the World Normals texture, every pixel represents a surface orientation, which is required for Triplanar Mapping, a technique that textures a surface based on whether it is a “floor”, “wall”, “ceiling”, and so on.

Both textures are generated from just a black-and-white depth map.
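To make the idea concrete, here is a minimal sketch of how a position and a normal can be recovered from depth alone. It is written in Python for readability and is not the actual shader code. It assumes a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, metric depth values, camera axes with x right, y down, z forward, and a camera pose given as a rotation matrix plus a translation relative to the launch position.

```python
import math

def unproject(u, v, depth, fx, fy, cx, cy):
    """Pixel (u, v) with metric depth -> camera-space point (pinhole model)."""
    return ((u - cx) / fx * depth, (v - cy) / fy * depth, depth)

def to_world(p_cam, rotation, translation):
    """Apply the camera pose. rotation is a 3x3 row-major matrix and
    translation is the camera position relative to where the lens launched."""
    return tuple(
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

def normal_from_neighbors(p, p_right, p_down):
    """Normal from the cross product of two tangents built from the
    reconstructed positions of the right and lower neighbor pixels.
    The order (down x right) makes the normal face the camera."""
    a = tuple(p_right[i] - p[i] for i in range(3))
    b = tuple(p_down[i] - p[i] for i in range(3))
    n = (
        b[1] * a[2] - b[2] * a[1],
        b[2] * a[0] - b[0] * a[2],
        b[0] * a[1] - b[1] * a[0],
    )
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n) if length > 0 else (0.0, 0.0, 0.0)
```

In a shader, the same steps would run once per pixel, with the neighbor positions read from adjacent depth samples.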

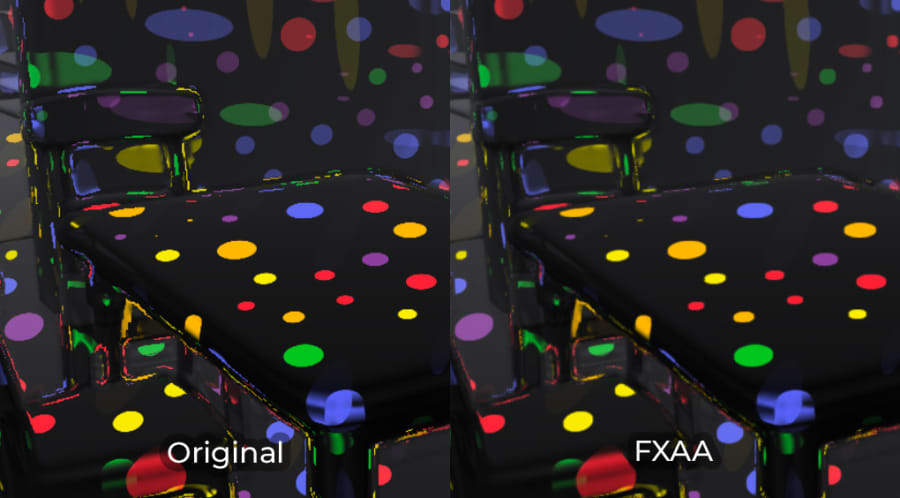
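For readers unfamiliar with Triplanar Mapping, the following Python sketch shows the usual blending scheme. It is an illustration under assumptions, not the code used in the lens: the `sharpness` exponent, the `scale` factor, and the `sample` callback (a stand-in for a texture lookup that returns a single grayscale value) are all hypothetical, and the normal is assumed to be non-zero.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights per axis: the more the normal points along an axis,
    the more the projection along that axis contributes."""
    ax, ay, az = (abs(c) ** sharpness for c in normal)
    total = ax + ay + az
    return (ax / total, ay / total, az / total)

def triplanar_sample(position, normal, sample, scale=1.0, sharpness=4.0):
    """Sample the texture three times, using world position as UVs,
    and blend the results by the normal-based weights."""
    x, y, z = (c * scale for c in position)
    wx, wy, wz = triplanar_weights(normal, sharpness)
    along_x = sample(z, y)  # projection for surfaces facing +/-X (walls)
    along_y = sample(x, z)  # projection for surfaces facing +/-Y (floor, ceiling)
    along_z = sample(x, y)  # projection for surfaces facing +/-Z (walls)
    return wx * along_x + wy * along_y + wz * along_z
```

A floor pixel with normal `(0, 1, 0)` gets weights `(0, 1, 0)`, so only the top-down projection is used, while a surface at 45 degrees blends two projections equally.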

Antialiasing

I was not satisfied with the built-in antialiasing, so I made my own version of Fast Approximate Anti-Aliasing (FXAA) to make the image look smoother.
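As a rough illustration of the principle behind FXAA, the Python sketch below shows only its first stage: a local luma contrast test that decides whether a pixel sits on an edge. Real FXAA then searches along the edge direction and blends accordingly. Here that step is replaced by a crude blend with the four neighbors, so this is a simplification and not the version used in the lens. The `edge_threshold` and `blend` values are assumptions.

```python
def luma(rgb):
    """Perceived brightness of an RGB color with components in [0, 1]."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

def fxaa_like_pixel(image, x, y, edge_threshold=0.125, blend=0.5):
    """image is a list of rows, each row a list of (r, g, b) tuples."""
    height, width = len(image), len(image[0])

    def at(px, py):
        # Clamp coordinates so border pixels reuse their nearest neighbor.
        px = min(max(px, 0), width - 1)
        py = min(max(py, 0), height - 1)
        return image[py][px]

    center = at(x, y)
    neighbors = [at(x, y - 1), at(x, y + 1), at(x - 1, y), at(x + 1, y)]
    lumas = [luma(c) for c in [center] + neighbors]

    # Low local contrast means no visible edge: leave the pixel untouched.
    if max(lumas) - min(lumas) < edge_threshold:
        return center

    # Simplification: blend toward the neighbor average instead of
    # searching along the edge as full FXAA does.
    average = tuple(sum(c[i] for c in neighbors) / 4.0 for i in range(3))
    return tuple(center[i] * (1.0 - blend) + average[i] * blend for i in range(3))
```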

SSAO

Once the geometry is reconstructed, Screen Space Ambient Occlusion (SSAO) becomes possible. Basically, it checks how much geometry surrounds each point and approximates indirect lighting by darkening creases, holes, and surfaces that are close to each other, as happens in the real world.
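The core of that check can be sketched in a few lines. The Python function below is a simplified illustration, not the shader from the lens: it assumes the reconstructed position `p`, the unit normal `n`, and a handful of nearby reconstructed positions `samples` are already available, and the `radius` and `bias` values are hypothetical.

```python
import math

def ambient_occlusion(p, n, samples, radius=0.5, bias=0.02):
    """Return a light factor in [0, 1]: 1.0 is fully lit, lower is darker.
    A nearby sample occludes p when it lies above p's tangent plane."""
    if not samples:
        return 1.0
    occlusion = 0.0
    for s in samples:
        d = (s[0] - p[0], s[1] - p[1], s[2] - p[2])
        dist = math.sqrt(d[0] * d[0] + d[1] * d[1] + d[2] * d[2])
        if dist < 1e-6 or dist > radius:
            continue  # ignore the point itself and geometry that is too far away
        cos_angle = (n[0] * d[0] + n[1] * d[1] + n[2] * d[2]) / dist
        # Closer samples, and samples more directly above the surface, occlude more.
        occlusion += max(0.0, cos_angle - bias) * (1.0 - dist / radius)
    return 1.0 - min(1.0, occlusion / len(samples))
```

On a flat floor every neighbor lies in the tangent plane, so the result stays at 1.0. Next to a wall or inside a crease, some neighbors rise above the plane and the pixel gets darker.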

That was hard!

Actually, this was the first time I got my hands on the Lens Studio Code Node! This node is part of the shader graph and allows writing classic OpenGL ES code, which makes complex shaders such as SSAO possible.

The biggest challenge was precision: Lens Studio doesn’t have half-float (high-precision) render targets, so I had to use hacks such as splitting a data vector and packing it into three different render targets.
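One common way to do this kind of packing is to spread each coordinate over the four 8-bit channels of its own render target. The Python sketch below illustrates that general trick. The exact layout used in the lens is not described here, so the one-component-per-target split and the `lo`/`hi` world range of -10 to 10 are assumptions.

```python
def pack_unit_float(v):
    """Encode a value in [0, 1] as four 8-bit channels (32 bits total)."""
    q = min(int(v * 2**32), 2**32 - 1)
    return ((q >> 24) & 0xFF, (q >> 16) & 0xFF, (q >> 8) & 0xFF, q & 0xFF)

def unpack_unit_float(rgba):
    """Inverse of pack_unit_float."""
    r, g, b, a = rgba
    return ((r << 24) | (g << 16) | (b << 8) | a) / 2**32

def pack_position(pos, lo=-10.0, hi=10.0):
    """Split an (x, y, z) position into three RGBA8 values,
    one per render target, after normalizing to the assumed range."""
    targets = []
    for c in pos:
        t = min(max((c - lo) / (hi - lo), 0.0), 1.0)  # clamp to [0, 1]
        targets.append(pack_unit_float(t))
    return targets

def unpack_position(targets, lo=-10.0, hi=10.0):
    """Rebuild the position from the three packed render-target values."""
    return tuple(lo + unpack_unit_float(t) * (hi - lo) for t in targets)
```

With a 20-unit range, a single 8-bit channel could only resolve steps of roughly 0.08 units, while the four-channel encoding keeps the round-trip error far below a millimeter.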

The whole shader code took about 1000 lines. In the end, I managed to get:

- Fast real-time geometry and normals reconstruction.

- Fast real-time SSAO for a more natural look.